To avoid a single point of failure in HAProxy, set up two identical HAProxy instances (one active and one standby) and use Keepalived to run VRRP between them. VRRP assigns a virtual IP address (VIP) to the active HAProxy and transfers it to the standby HAProxy if the active one fails. The failover is seamless because the two HAProxy instances need no shared state.

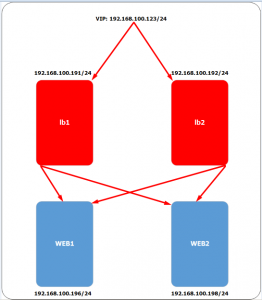

In this example, two nodes act as load balancers with IP failover in front of our database cluster. The VIP floats between LB1 (master) and LB2 (backup). When LB1 goes down, LB2 takes over the VIP; once LB1 is up again, the VIP fails back to LB1 because it holds the higher priority.

We are using the following hosts/IPs:

VIP: 192.168.10.100

LB1: 192.168.10.101

LB2: 192.168.10.102

DB1: 192.168.10.111

DB2: 192.168.10.112

DB3: 192.168.10.113

ClusterControl: 192.168.10.115

You may refer to the following diagram for the architecture:

Install HAProxy

1. Log in to the ClusterControl node to perform this installation. We have built a script that deploys HAProxy automatically, available in our Git repository at https://github.com/severalnines/s9s-admin. Navigate to the installation directory you used to deploy the database cluster and clone the repo:

| $ cd /root/s9s-galera-2.2.0/mysql/scripts/install

$ git clone https://github.com/severalnines/s9s-admin.git |

2. Before we start to deploy, make sure LB1 and LB2 are accessible using passwordless SSH. Copy the SSH keys to the load balancer nodes:

| $ ssh-copy-id -i ~/.ssh/id_rsa 192.168.10.101

$ ssh-copy-id -i ~/.ssh/id_rsa 192.168.10.102 |
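Before running the installer, it may help to confirm that key-based login really works. The following is a minimal sketch, not part of the s9s-admin tooling: the function names `check_passwordless_ssh` and `report_ssh_status` are hypothetical, and it assumes an OpenSSH client. `BatchMode=yes` makes `ssh` fail instead of prompting for a password.

```shell
#!/bin/sh
# Sketch only: helper names are illustrative, not from s9s-admin.

# Succeeds if we can log in to the host without any interactive prompt.
check_passwordless_ssh() {
    target=$1
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$target" true 2>/dev/null
}

# Prints one status line per host given as an argument.
report_ssh_status() {
    for host in "$@"; do
        if check_passwordless_ssh "$host"; then
            echo "$host: passwordless SSH OK"
        else
            echo "$host: passwordless SSH FAILED"
        fi
    done
}

# Usage (on the ClusterControl node):
#   report_ssh_status 192.168.10.101 192.168.10.102
```

If either host reports FAILED, repeat the `ssh-copy-id` step for that host before continuing.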

3. Install HAProxy on both nodes:

| ./s9s-admin/cluster/s9s_haproxy --install -i 1 -h 192.168.10.101

./s9s-admin/cluster/s9s_haproxy --install -i 1 -h 192.168.10.102 |

4. You will notice that these two load balancer nodes have been installed and provisioned by ClusterControl. You can verify this by logging in to ClusterControl > Nodes, where you should see a screen similar to the screenshot below:

Install Keepalived

The following steps should be performed on both LB1 and LB2.

1. Install the Keepalived package:

On RHEL/CentOS:

| $ yum install -y centos-release

$ yum install -y keepalived

$ chkconfig keepalived on |

On Debian/Ubuntu:

| $ sudo apt-get install -y keepalived

$ sudo update-rc.d keepalived defaults |

2. Tell the kernel to allow binding to non-local IP addresses and apply the changes:

| $ echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf

$ sysctl -p |
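Appending with `>>` adds a duplicate line every time the step is repeated. As a hedged alternative, the sketch below appends the setting only when the key is not yet in the file. The function name `ensure_sysctl_setting` is hypothetical, and it deliberately does not modify a value that is already present.

```shell
#!/bin/sh
# Sketch only: ensure_sysctl_setting is an illustrative helper name.

# ensure_sysctl_setting FILE KEY VALUE
# Appends "KEY = VALUE" to FILE unless a line for KEY already exists.
# An existing line is left untouched, whatever its value.
ensure_sysctl_setting() {
    conf_file=$1   # e.g. /etc/sysctl.conf
    key=$2         # e.g. net.ipv4.ip_nonlocal_bind
    value=$3       # e.g. 1
    if grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$conf_file" 2>/dev/null; then
        echo "present"
    else
        printf '%s = %s\n' "$key" "$value" >> "$conf_file"
        echo "added"
    fi
}

# Usage (as root on LB1 and LB2):
#   ensure_sysctl_setting /etc/sysctl.conf net.ipv4.ip_nonlocal_bind 1
#   sysctl -p
#   sysctl -n net.ipv4.ip_nonlocal_bind   # prints the active value
```

If the helper reports `present`, check the existing line by hand to make sure the value is 1.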

Configure Keepalived and Virtual IP

1. Log in to LB1 and add the following lines to /etc/keepalived/keepalived.conf:

|

vrrp_script chk_haproxy {

script "killall -0 haproxy" # verify that the process exists

interval 2 # check every 2 seconds

weight 2 # add 2 points of prio if OK

}

vrrp_instance VI_1 {

interface eth0 # interface to monitor

state MASTER

virtual_router_id 51 # Assign one ID for this route

priority 101 # 101 on master, 100 on backup

virtual_ipaddress {

192.168.10.100 # the virtual IP

}

track_script {

chk_haproxy

}

}

|
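The check script relies on signal 0, which tests whether a process exists without actually delivering a signal. The sketch below illustrates the same idea with the shell's `kill -0` on a single PID rather than `killall -0` on a process name. The `sleep` process is only a stand-in for haproxy, and `is_running` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch only: demonstrates the signal-0 existence check used by chk_haproxy.

# is_running PID: succeeds if the process exists and we are allowed to signal it.
is_running() {
    kill -0 "$1" 2>/dev/null
}

sleep 30 &                         # stand-in for the haproxy process
pid=$!
is_running "$pid" && echo "process $pid is alive"

kill "$pid"                        # simulate a crash
wait "$pid" 2>/dev/null || true    # reap the child so the PID is really gone
is_running "$pid" || echo "process $pid is gone"
```

Keepalived runs the configured check every `interval` seconds and uses its exit status in the same way: zero means HAProxy is alive, non-zero means it is not.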

2. Log in to LB2 and add the following lines to /etc/keepalived/keepalived.conf:

|

vrrp_script chk_haproxy {

script "killall -0 haproxy" # verify that the process exists

interval 2 # check every 2 seconds

weight 2 # add 2 points of prio if OK

}

vrrp_instance VI_1 {

interface eth0 # interface to monitor

state MASTER # initial state only; the priority election decides who keeps the VIP

virtual_router_id 51 # Assign one ID for this route

priority 100 # 101 on master, 100 on backup

virtual_ipaddress {

192.168.10.100 # the virtual IP

}

track_script {

chk_haproxy

}

}

|
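The two configurations differ only in `priority`, and the `weight 2` bonus is what lets LB2 overtake LB1 when HAProxy dies on LB1. The arithmetic can be sketched as follows. This is a simplified model, not Keepalived's actual election code: it only covers a failed HAProxy process (a node that is completely down sends no adverts at all), and the function names are hypothetical.

```shell
#!/bin/sh
# Sketch only: models base priority + weight while the check succeeds.

# effective_priority BASE WEIGHT HAPROXY_OK(1|0)
effective_priority() {
    base=$1
    weight=$2
    haproxy_ok=$3
    if [ "$haproxy_ok" -eq 1 ]; then
        echo $((base + weight))    # check passed: bonus applied
    else
        echo "$base"               # check failed: base priority only
    fi
}

# elect_master LB1_OK LB2_OK: prints the node with the higher priority.
# With priorities 101 and 100 and weight 2, a tie cannot occur.
elect_master() {
    p1=$(effective_priority 101 2 "$1")
    p2=$(effective_priority 100 2 "$2")
    if [ "$p1" -ge "$p2" ]; then
        echo "LB1 ($p1 vs $p2)"
    else
        echo "LB2 ($p2 vs $p1)"
    fi
}

elect_master 1 1   # both healthy         -> LB1 (103 vs 102)
elect_master 0 1   # HAProxy down on LB1  -> LB2 (102 vs 101)
elect_master 1 0   # HAProxy down on LB2  -> LB1 (103 vs 100)
```

The weight must be larger than the gap between the two base priorities; with a weight of 1 or less, LB1 would keep the VIP even after its HAProxy had stopped.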

3. Start Keepalived on both nodes:

| $ sudo /etc/init.d/keepalived start |

4. Verify the Keepalived status. LB1 should hold the VIP and be in the MASTER state, while LB2 should be in the BACKUP state without the VIP:

LB1 IP:

|

$ ip a | grep -e 'inet.*eth0'

inet 192.168.10.101/24 brd 192.168.10.255 scope global eth0

inet 192.168.10.100/32 scope global eth0

|

LB1 Keepalived state:

|

$ cat /var/log/messages | grep VRRP_Instance

Apr 19 15:47:25 lb1 Keepalived_vrrp[6146]: VRRP_Instance(VI_1) Transition to MASTER STATE

Apr 19 15:47:25 lb1 Keepalived_vrrp[6146]: VRRP_Instance(VI_1) Entering MASTER STATE

|

LB2 IP:

|

$ ip a | grep -e 'inet.*eth0'

inet 192.168.10.102/24 brd 192.168.10.255 scope global eth0

|

LB2 Keepalived state:

|

$ cat /var/log/messages | grep VRRP_Instance

Apr 19 15:47:25 lb2 Keepalived_vrrp[6146]: VRRP_Instance(VI_1) Transition to MASTER STATE

Apr 19 15:47:25 lb2 Keepalived_vrrp[6146]: VRRP_Instance(VI_1) Received higher prio advert

Apr 19 15:47:25 lb2 Keepalived_vrrp[6146]: VRRP_Instance(VI_1) Entering BACKUP STATE

|

*Debian/Ubuntu: On some distributions /var/log/messages might be missing; look for similar log entries in /var/log/syslog instead.

Installation completed! You can now access your database servers through the VIP, 192.168.10.100, on port 33306.
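The manual checks above can be wrapped in two small helpers, shown here as a sketch under stated assumptions: the interface is `eth0` as in the configuration, and the function names `holds_vip` and `pick_log_file` are hypothetical. `holds_vip` reads `ip` output on standard input, so it can also be tried against saved output.

```shell
#!/bin/sh
# Sketch only: helper names are illustrative.
VIP=192.168.10.100

# holds_vip: reads `ip addr` output on stdin; succeeds if the VIP is listed.
# -F matches the address as a fixed string, so the dots are literal.
holds_vip() {
    grep -Fq "inet ${VIP}/"
}

# pick_log_file CANDIDATE...: prints the first readable file, fails if none is.
pick_log_file() {
    for f in "$@"; do
        if [ -r "$f" ]; then
            echo "$f"
            return 0
        fi
    done
    return 1
}

# Usage (on LB1 or LB2):
#   ip -4 addr show dev eth0 | holds_vip && echo "this node holds the VIP"
#   grep VRRP_Instance "$(pick_log_file /var/log/messages /var/log/syslog)"
```

Running the first usage line on both load balancers should report the VIP on exactly one of them.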

![image 2](https://ittoday.vn/wp-content/uploads/2021/04/images_1_2.jpg)